📸 Sora 2 Tightens Rules and Opens the Door for Creators

Nvidia builds trillion-dollar AI factories, OpenAI reshapes Sora for creators, Samsung’s tiny model outthinks giants, and ChatGPT takes a personal turn.

My fellow AI explorers,

Nvidia became the world’s most valuable company and gold hit $4,060 an ounce, yet AI is the real gold rush. Jensen Huang now steers a $4.5 trillion empire at the center of a $2 trillion AI future. OpenAI tightened Sora’s copyright rules and opened its API floodgates, while a 7M-parameter model from Samsung quietly beat some of the world’s top LLMs at reasoning.

In today’s edition:

💰 Nvidia’s $4.5T reign and the coming “AI factory” era

🎬 Sora 2’s new rules and what it means for creators

🧠 A 7M-parameter upstart that just outreasoned Gemini 2.5 Pro

Must See AI Tools

💰 Payman: AI That Pays Humans. Over 10,000 people have signed up for the beta.

💫 SubMagic: An AI tool that edits short-form content for you! (Get 10% off using code “uncoverai” at checkout)

🎤 11Labs: #1 AI voice generator (Click Here to get 10,000 free credits upon signing up!)

🤖 ManyChat: Automate your responses & conversations on IG, FB and more! (Click Here to get first month for free)

🎙️ Syllaby: The only social media marketing tool you’ll ever need - powered by AI! (Get 25% off the first month or any annual plan with code “UNCOVER” at checkout)

OpenAI

API pricing, tighter moderation, and the road to a creator economy

Sora 2 adds an API (~$0.10/sec of generation) and updates copyright controls after the initial anything-goes frenzy.

Key takeaways:

API = velocity: programmatic pipelines for storyboards, pre-viz, ad variants.

Rights-holder controls: stricter IP handling; expect refusals where prompts featuring shows or celebrities used to pass.

Monetization hints: OpenAI teases ways for rights holders/creators to participate (details pending).

Reality check for teams: budgeting by seconds makes iteration strategy crucial. You’ll storyboard tighter, use reference frames, and chain edits to avoid starting from scratch.
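
To see why per-second billing rewards tighter storyboards, here is a minimal back-of-envelope sketch. It assumes the ~$0.10 per generated second quoted above and that every attempt renders the full clip length; the function name and the six-shot storyboard are hypothetical, not part of any OpenAI tooling.

```python
# Assumption: ~$0.10 per generated second (the approximate rate quoted above),
# billed on the full length of every attempt.
RATE_PER_SECOND = 0.10


def estimate_cost(clip_lengths: list[float], attempts_per_clip: int,
                  rate: float = RATE_PER_SECOND) -> float:
    """Dollar cost if every attempt at every clip renders its full length."""
    billed_seconds = sum(length * attempts_per_clip for length in clip_lengths)
    return round(billed_seconds * rate, 2)


if __name__ == "__main__":
    shots = [8.0] * 6  # hypothetical storyboard: six 8-second shots
    print(estimate_cost(shots, attempts_per_clip=5))  # loose iteration
    print(estimate_cost(shots, attempts_per_clip=2))  # tighter storyboard
```

With these assumptions, five attempts per shot bills 240 seconds ($24.0), while two attempts bills 96 seconds ($9.6). The gap widens linearly with clip count, which is why reusing reference frames and chaining edits matters more than any single prompt.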

🔮 Prediction: Sora will bifurcate: brand-safe commercial mode (clearable assets, provenance, watermarks) and experimental creator mode (looser, but sandboxed). Tools that manage asset rights state will become table stakes in video pipelines.

Founders…

🤖 Need an AI Agency to Help Your Business Implement AI Solutions?

Our preferred partner Align AI provides you with an expert AI and Automation implementation team to add 10-40+ hours of increased productivity per employee and achieve your goals faster.

Jensen Huang

Gold may be flirting with $4,060 an ounce, but the real rush isn’t underground—it’s in silicon. Global spending on AI is projected to hit $1.5 trillion this year, and if the trend continues, $2 trillion by 2026. At the center of it all? Jensen Huang, the visionary CEO of Nvidia, which just became the world’s most valuable company with a $4.5 trillion market cap.

In a new interview on CNBC, Huang outlined how Nvidia’s early bets on full-stack AI infrastructure—chips, networking, and software—cemented its dominance, and why the company’s partnership with OpenAI signals a new phase for both sides.

Here’s what stood out:

Direct partnership with OpenAI: For the first time, Nvidia will sell systems directly to OpenAI rather than only through cloud intermediaries like Azure and Oracle Cloud.

AI factories on the horizon: Huang predicts each “gigawatt-scale AI factory” will require $50–60 billion in infrastructure, with Nvidia supplying everything from GPUs to networking and software.

Equity opportunities: Nvidia will have the chance to invest in OpenAI alongside other backers as the company moves toward self-hosted hyperscaling.
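
Huang’s per-gigawatt figure lends itself to quick back-of-envelope math. A minimal Python sketch, assuming the $50–60 billion range scales linearly with capacity (a simplification; real build-outs will not scale perfectly linearly, and the function name is hypothetical):

```python
# Assumption: Huang's estimate of $50-60 billion of infrastructure per gigawatt,
# applied linearly to any capacity.
LOW_B_PER_GW = 50.0
HIGH_B_PER_GW = 60.0


def factory_capex_billions(gigawatts: float) -> tuple[float, float]:
    """Return the (low, high) infrastructure estimate in billions of dollars."""
    if gigawatts < 0:
        raise ValueError("gigawatts must be non-negative")
    return gigawatts * LOW_B_PER_GW, gigawatts * HIGH_B_PER_GW


if __name__ == "__main__":
    low, high = factory_capex_billions(10.0)  # hypothetical 10 GW build-out
    print(f"10 GW: ${low:.0f}B to ${high:.0f}B")
```

Under that assumption, a hypothetical 10 GW build-out lands at roughly $500B to $600B, which is how a handful of “AI factories” adds up to a trillion-dollar category.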

When asked about AMD’s new OpenAI deal, Huang didn’t hold back, calling it “clever but surprising” and noting that AMD gave away 10% of the company before even building its next chip line. Nvidia’s edge, he stressed, comes from controlling the entire AI stack, which allows yearly leaps in performance even as Moore’s Law stagnates.

🔮 Prediction: Nvidia isn’t just selling chips—it’s quietly building the foundation for an AI-powered industrial revolution. Expect “AI factories” to become the next trillion-dollar infrastructure category, powering not just data centers, but the entire digital economy.

AI SaaS Founders

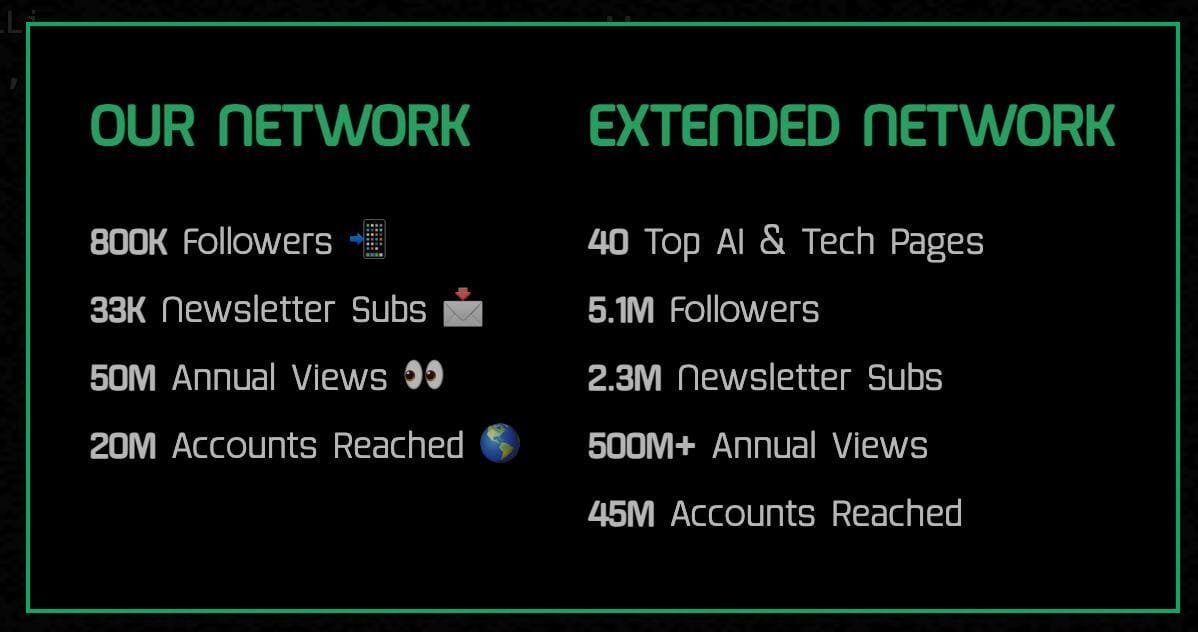

🚨Want Millions of Impressions For Your AI SaaS, Done For You?

At uncovernews.co, we specialize in getting AI SaaS products the attention they deserve through strategic influencer marketing campaigns designed to drive millions of impressions at a fraction of the cost!

Get Your AI Startup’s News or Product In Front of Millions Quickly

LLMs

Small model, big brain. A single-author paper from Samsung Montréal introduces the Tiny Recursive Model (TRM)—a 7M-parameter, 2-layer network that iteratively refines its own answers and posts ~45% on ARC-AGI-1 and ~8% on ARC-AGI-2, surpassing several frontier LLMs (e.g., Gemini 2.5 Pro, o3-mini, DeepSeek-R1) on these hard reasoning benchmarks—with <0.01% of their size.

What’s new (and why it matters):

Recursion over scale: TRM replaces heavyweight chain-of-thought + massive parameter counts with a light loop: propose → critique → revise → repeat. That “virtual depth” boosts generalization on symbolic/visual puzzles.

Clean break from HRM: It simplifies the earlier Hierarchical Reasoning Model (HRM) (two interacting RNNs with deep supervision), matching or beating it while being smaller and easier to reason about.

SOTA-ish puzzle skills: Reported jumps include Sudoku-Extreme 55%→87% and Maze-Hard 75%→85%, alongside the ARC gains above. Code and results are public.

How it works (at a glance):

Keep two memories: the current guess and a reasoning trace.

Unroll recursion a fixed number of steps; each step updates both.

Stop when the refinement converges or time’s up—return the best candidate.

This yields “depth via loops,” not layers—avoiding overfitting that appeared when stacking more layers.
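
To make “depth via loops” concrete, here is a toy sketch of the two-memory refinement loop described above. It is not the TRM architecture or the paper’s code: the update rules are hypothetical scalar stand-ins for the tiny network, chosen so the loop settles on the square root of x, and it returns the latest candidate rather than tracking a “best” one. What it does show is the control flow: the same two small update functions are reused at every step, so effective depth grows with iterations rather than layers.

```python
from typing import Callable, Tuple

# Hypothetical stand-ins for the tiny network (in TRM these would be learned layers).
UpdateZ = Callable[[float, float, float], float]  # (input x, guess y, trace z) -> new z
UpdateY = Callable[[float, float], float]         # (guess y, trace z) -> new y


def recursive_refine(
    x: float,
    update_z: UpdateZ,
    update_y: UpdateY,
    y0: float = 1.0,
    z0: float = 0.0,
    n_latent: int = 6,    # inner updates of the reasoning trace per step
    max_steps: int = 32,  # outer refinement steps ("time's up" budget)
    tol: float = 1e-9,    # convergence threshold on the guess
) -> Tuple[float, int]:
    """Refine guess y with reasoning trace z; return (final guess, steps used)."""
    y, z = y0, z0
    for step in range(1, max_steps + 1):
        # Update the reasoning trace several times, conditioned on input and guess.
        for _ in range(n_latent):
            z = update_z(x, y, z)
        # Revise the guess using the updated trace.
        y_new = update_y(y, z)
        if abs(y_new - y) < tol:  # refinement has converged
            return y_new, step
        y = y_new
    return y, max_steps  # budget exhausted: return the latest candidate


# Toy task: find sqrt(x). The trace drifts toward the error signal x/y - y,
# and the guess moves halfway along it (a relaxed Newton-style step).
def toy_update_z(x: float, y: float, z: float) -> float:
    return 0.5 * z + 0.5 * (x / y - y)


def toy_update_y(y: float, z: float) -> float:
    return y + 0.5 * z


if __name__ == "__main__":
    answer, steps = recursive_refine(4.0, toy_update_z, toy_update_y)
    print(f"{answer:.6f} after {steps} steps")
```

Nothing here is learned; the point is that a fixed, tiny updater applied repeatedly can reach an answer a single pass could not, which is the intuition behind trading layers for loops.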

Context & comparisons:

HRM showed that tiny models with deep supervision and multi-timescale recurrences can crush puzzles like Sudoku and mazes; TRM argues you don’t need the hierarchies—just an efficient recursive updater.

On ARC-AGI, TRM’s ~45/8 (v1/v2) beats many large LLMs under the paper’s eval protocol; only specialized “thinking” behemoths remain ahead—at vastly higher cost.

🔧 Try it / read it:

Paper (arXiv) • Code (Samsung SAIL Montréal) • Explainers (Marktechpost, Forbes)

🔮 Prediction: We’re seeing a new scaling axis: recursion depth at test time. Expect hybrid systems that pair small, specialized solvers like TRM with larger planners—getting frontier-level reasoning on devices without frontier-level footprints.

30-Second AI Play

🤖 Make ChatGPT answers feel like they know you (without chaos)

You’ve got four knobs. Use them deliberately:

1) Memories (fastest start):

Settings → Personalization → turn Memories on. As you chat, it auto-saves relevant facts.

Do: let it learn stable preferences (tone, formats, recurring goals).

Don’t: let personal quirks bleed into work. Periodically prune.

2) Projects (best practice):

Create a Work and a Personal project.

Turn on project memories.

Add project instructions (voice, constraints, sources).

Upload evergreen files (brand guide, ICP, KPIs).

Result: each chat inherits only what’s relevant to that context.

3) Custom Instructions (manual mode):

If you hate surprises, disable Memories and paste a tidy profile (role, goals, deliverable styles).

Pro tip: list current quarterly goals and forbidden outputs (e.g., “No hashtags. Keep to 120–160 words.”).

4) GPTs (shareable presets):

Package a use-case (e.g., “Pitch Polisher”) with instructions + knowledge files.

Great for teams, weak for organizing many ongoing threads. Use Projects for ongoing work and GPTs for repeatable tasks you’ll share.

Simple recipe:

New/casual user → Memories → graduate to Projects → optionally add Custom Instructions → build/share GPTs for team workflows.

Other Relevant AI News!

🧠 Google’s Gemini “computer use” model can operate a headless browser to complete tasks end-to-end—try a hosted demo and watch it navigate, click, and fill forms in a VM.

🛰️ Perplexity’s AI browser, Comet, is now free for everyone, though early hands-on testing suggests it trails rivals’ Chrome extensions in reliability; still worth a spin for research workflows.

🖼️ HunyuanImage 3.0 goes open-source and hits top-tier quality across logos, comics, and photo realism—another strong push from China’s open model ecosystem (rankings).

🎞️ xAI Imagine v0.9 adds snappy text-to-video previews from infinite-scroll image generations; fun for ideation, not production-ready yet.

🧩 Google Opal (no-code AI apps) expands to ~50 new countries and adds debugging—a gentle on-ramp for building lightweight agentic tools without wrangling infra.

Golden Nuggets

⚙️ Nvidia is building trillion-dollar AI factories that could become as vital as today’s energy grids.

🎬 OpenAI’s Sora 2 update adds tighter copyright rules and an API, marking a new phase for AI-generated video.

🧩 Samsung’s 7-million-parameter Tiny Recursive Model shows small models can now outreason several frontier LLMs on hard puzzle benchmarks.

🌐 ChatGPT is becoming more personal, learning your context through apps, projects, and memories.

Have any feedback for us? Please reply to this email (I read them all!)

Until our next AI rendezvous,

Anthony | Founder of Uncover AI